As Unity users’ platform needs grew more complex, our input system could no longer help them easily create and visualize input across multiple platforms and devices. To solve this problem, I designed a new input system and a visual interface for how users create device and input interactions.

Challenge

Much of Unity’s success as an engine came from its easy, intuitive configuration for mobile and desktop games. It arrived alongside the iPhone and made building and shipping iOS titles easy. However, as the industry shifted toward cross-platform gaming and more platforms emerged, it became increasingly difficult for users to create and manage input and interactions across every device and platform.

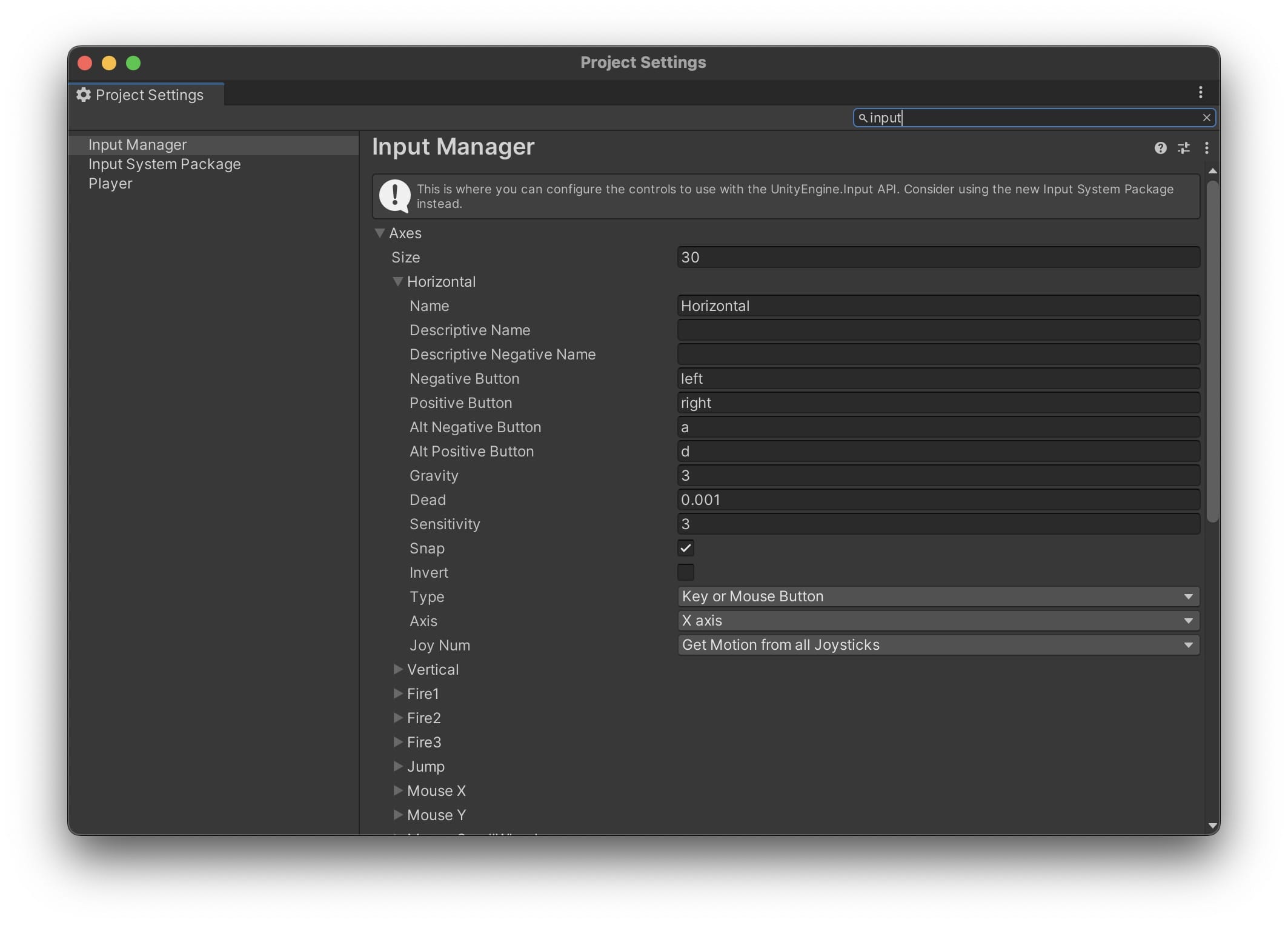

We were presented with two problems. First, the original Input Manager couldn’t scale to meet our users’ growing needs. It was built to solve input for a single platform, lacked customization, and had a rudimentary API for handling input. Second, developers and platform providers found it difficult to extend as new platforms were integrated into the engine. We needed a solution that could scale as more platforms were added and provide a more intuitive way of creating and managing device input.

Solution

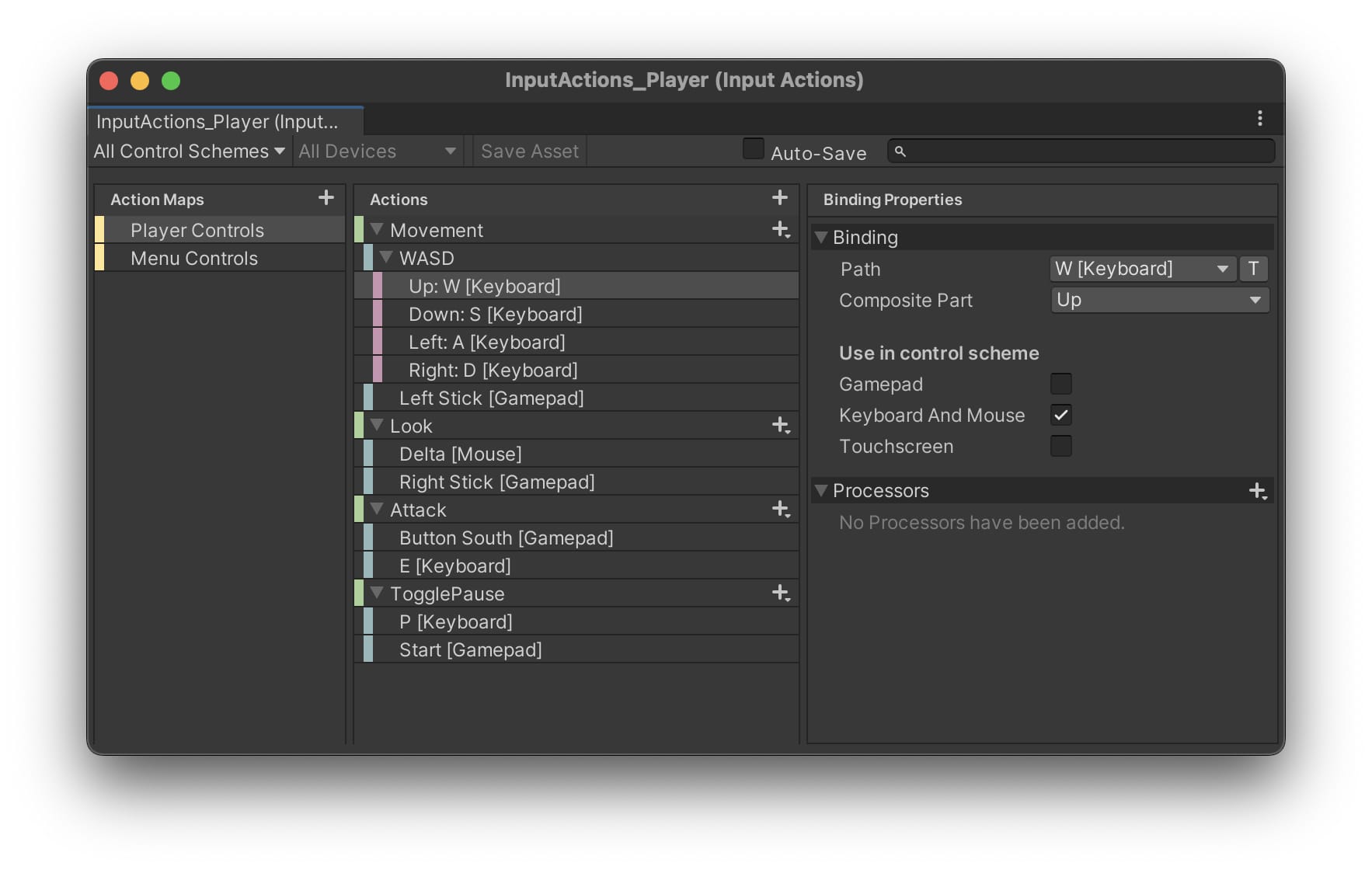

I proposed moving away from the original Input Manager and designing a new visual interface focused on making input and events easier to visualize, create, and manage across many platforms. The new interface let users quickly set up input, choose the devices they wanted, create contexts for specific input and events, and load or unload those contexts at runtime. This let us follow Unity’s asset authoring standards and provided a much more flexible system than the previous preferences-based solution.
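Loading and unloading contexts at runtime can be sketched as follows. This is a minimal sketch, assuming an input asset that contains two action maps named `Gameplay` and `UI`; both map names and the `InputContextSwitcher` component are hypothetical. `FindActionMap`, `Enable`, and `Disable` are Input System calls.

```csharp
using UnityEngine;
using UnityEngine.InputSystem;

// Hypothetical component: swaps which input context (action map) is live.
public class InputContextSwitcher : MonoBehaviour
{
    // Assumed: an .inputactions asset with maps named "Gameplay" and "UI".
    [SerializeField] private InputActionAsset actions;

    private InputActionMap gameplayMap;
    private InputActionMap uiMap;

    private void Awake()
    {
        // Fail loudly if the assumed map names are missing from the asset.
        gameplayMap = actions.FindActionMap("Gameplay", throwIfNotFound: true);
        uiMap = actions.FindActionMap("UI", throwIfNotFound: true);
    }

    private void OnEnable() => EnterGameplay();

    // Turn off every map in the asset when this component goes away.
    private void OnDisable() => actions.Disable();

    // Gameplay context: character controls respond, menu controls do not.
    public void EnterGameplay()
    {
        uiMap.Disable();
        gameplayMap.Enable();
    }

    // Menu context, e.g. when a pause screen opens.
    public void EnterMenu()
    {
        gameplayMap.Disable();
        uiMap.Enable();
    }
}
```

A pause menu could call `EnterMenu` when it opens and `EnterGameplay` when it closes, so the same button can mean "attack" in one context and "confirm" in the other without any conditional logic in the handlers.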

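Input was broken into Action Maps, Actions, Composites, and Bindings. Action Maps group related Actions into a context (for example, gameplay or menus), Actions describe what the player wants to do, Bindings connect each Action to specific device controls, and Composites combine several Bindings into a single value, such as four keys forming a 2D movement vector. This organization made the system flexible enough to support everything from generic devices like gamepads to platform-specific features like six-degrees-of-freedom (6DoF) input on the Meta Quest Pro or hand tracking on Apple Vision Pro.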
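The same four-level hierarchy can also be built in code, which makes the nesting explicit. This is a minimal sketch, assuming the Input System package is installed; the class, map, and action names are hypothetical, while `AddAction`, `AddBinding`, and `AddCompositeBinding(...).With(...)` are the package's setup extension methods.

```csharp
using UnityEngine;
using UnityEngine.InputSystem;

// Hypothetical example: one Action Map with two Actions, built entirely in code.
public class GameplayActionsFromCode : MonoBehaviour
{
    private InputActionMap gameplay;

    private void Awake()
    {
        // Action Map: a named context that groups related Actions.
        gameplay = new InputActionMap("Gameplay");

        // Action: a value-type action that produces a Vector2.
        var move = gameplay.AddAction("Move", InputActionType.Value);

        // Binding: a direct path to one device control.
        move.AddBinding("<Gamepad>/leftStick");

        // Composite: four keyboard Bindings combined into one Vector2.
        move.AddCompositeBinding("2DVector")
            .With("Up", "<Keyboard>/w")
            .With("Down", "<Keyboard>/s")
            .With("Left", "<Keyboard>/a")
            .With("Right", "<Keyboard>/d");

        // A button Action with one Binding per device family.
        var attack = gameplay.AddAction("Attack", InputActionType.Button);
        attack.AddBinding("<Gamepad>/buttonSouth");
        attack.AddBinding("<Keyboard>/space");

        move.performed += ctx => Debug.Log($"Move: {ctx.ReadValue<Vector2>()}");
        attack.started += _ => Debug.Log("Attack");
    }

    // Action Maps only report input while enabled.
    private void OnEnable() => gameplay.Enable();
    private void OnDisable() => gameplay.Disable();
}
```

In practice most projects author this structure in the visual editor rather than in code; game logic then only ever sees "Move" and "Attack", regardless of which Binding produced the value.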

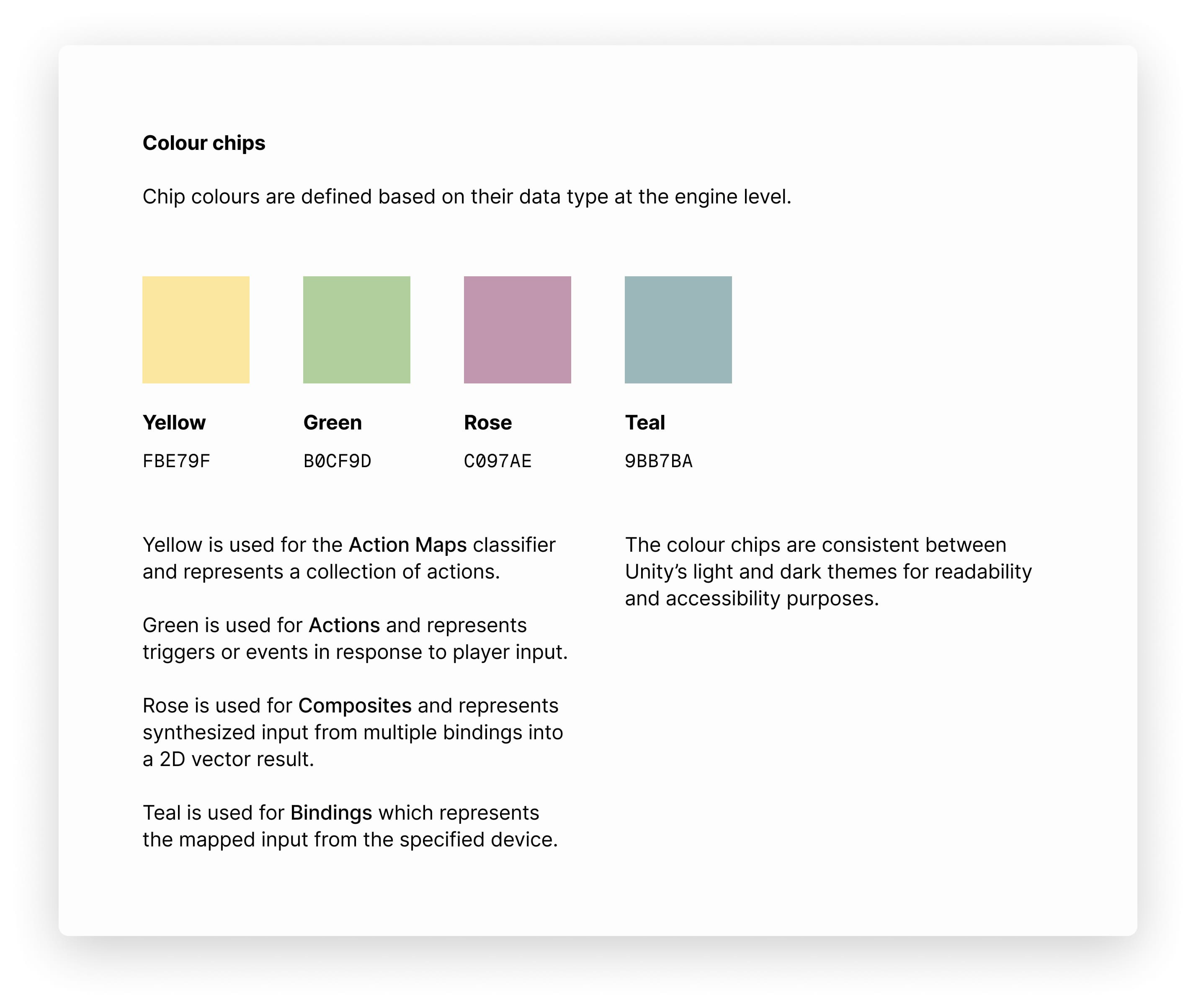

To improve clarity and make the different data types easier to understand, I designed a colour-chip pattern that clearly separates each element type. Colours were chosen for accessibility and readability, and were informed by earlier user testing of an icon-based approach that wasn’t easy to grok.

Furthermore, this simplified design allowed us to create a much cleaner architecture and mapping in C# code when accessing the input system from a Player Controller script:

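```csharp
using UnityEngine;
using UnityEngine.InputSystem;

public class PlayerController : MonoBehaviour
{
    // PlayerAnimationBehaviour and GameManager are project-specific classes defined elsewhere.
    [SerializeField] private PlayerAnimationBehaviour playerAnimationBehaviour;

    // Latest raw movement input, consumed by the movement smoothing function.
    private Vector3 rawInputMovement;

    //INPUT SYSTEM ACTION METHODS --------------
    // These handlers take InputAction.CallbackContext, the signature PlayerInput
    // uses when its Behavior is set to Invoke Unity Events.

    // Called from PlayerInput when a joystick or the arrow keys are pushed.
    // Stores the input vector as a Vector3 (x, 0, y) for the smoothing function to use.
    public void OnMovement(InputAction.CallbackContext value)
    {
        Vector2 inputMovement = value.ReadValue<Vector2>();
        rawInputMovement = new Vector3(inputMovement.x, 0, inputMovement.y);
    }

    // Called from PlayerInput when a button bound to the 'Attack' action is pressed.
    public void OnAttack(InputAction.CallbackContext value)
    {
        if (value.started)
        {
            playerAnimationBehaviour.PlayAttackAnimation();
        }
    }

    // Called from PlayerInput when a button bound to the 'TogglePause' action is pressed.
    public void OnTogglePause(InputAction.CallbackContext value)
    {
        if (value.started)
        {
            GameManager.Instance.TogglePauseState(this);
        }
    }
}
```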

Users can define a single input asset, assign it to a prefab or GameObject, and then instantiate that prefab at runtime, receiving input from multiple devices simultaneously through one asset. The video below demonstrates input from PlayStation, Xbox, and Nintendo Switch controllers, as well as a keyboard. All of these devices are mapped to a single asset, and game logic assigns UI and shader effects based on which controller is paired with each character instance.
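A minimal sketch of this one-asset, many-devices pattern follows. It assumes a `playerPrefab` carrying a `PlayerInput` component that references the shared asset, control schemes named `Gamepad` and `Keyboard` in that asset, and a `Renderer` on the prefab to tint; the spawner class and the tint colours are hypothetical. `PlayerInput.Instantiate` pairs each new instance with one device, and `DualShockGamepad` and `XInputController` are the package's base classes for PlayStation-style and Xbox-style controllers.

```csharp
using UnityEngine;
using UnityEngine.InputSystem;
using UnityEngine.InputSystem.DualShock;
using UnityEngine.InputSystem.XInput;

// Hypothetical spawner: one player instance per device, all sharing one input asset.
public class LocalPlayerSpawner : MonoBehaviour
{
    // Assumed: prefab with a PlayerInput component referencing the shared .inputactions asset.
    [SerializeField] private GameObject playerPrefab;

    private void Start()
    {
        // One player per connected gamepad ("Gamepad" is an assumed control scheme name).
        foreach (var gamepad in Gamepad.all)
        {
            var player = PlayerInput.Instantiate(playerPrefab, controlScheme: "Gamepad", pairWithDevice: gamepad);
            Tint(player, ColorFor(gamepad));
        }

        // Optional keyboard player ("Keyboard" is likewise an assumed scheme name).
        if (Keyboard.current != null)
        {
            var player = PlayerInput.Instantiate(playerPrefab, controlScheme: "Keyboard", pairWithDevice: Keyboard.current);
            Tint(player, Color.white);
        }
    }

    // Picks an illustrative colour from the concrete controller class.
    private static Color ColorFor(Gamepad gamepad)
    {
        switch (gamepad)
        {
            case DualShockGamepad _: return Color.blue;  // PlayStation controllers
            case XInputController _: return Color.green; // Xbox controllers
            default: return Color.red;                   // other gamepads, e.g. Switch Pro
        }
    }

    // Renderer.material returns a per-instance copy, so players don't share a colour.
    private static void Tint(PlayerInput player, Color color)
    {
        var renderer = player.GetComponentInChildren<Renderer>();
        if (renderer != null)
            renderer.material.color = color;
    }
}
```

Because every instance reads the same Actions from the same asset, supporting a new controller type mostly means adding Bindings to that asset rather than changing the player code.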

Impact

The redesigned input system launched on October 14, 2019 and was released to all Unity users on July 23, 2020 with the Unity 2020.1 release. Since then, it has served as the main input integration for major platforms such as PlayStation, Xbox, Nintendo Switch, Meta Quest, and many more. It is also the main input system used by PolySpatial and the XR Interaction Toolkit, exposing device input, headset tracking, and hand simulation between Unity and Apple Vision Pro.